There’s a reason why it’s proven so difficult to eliminate “hallucinations” from modern AIs, and why their mistakes, quirks, and edge cases are so surreal and dreamlike: modern AIs are sleepwalkers. They aren’t conscious and they don’t have a stable world model; all their output is hallucination because the type of “thought” they employ is exactly analogous to dreaming. I’ll talk more about this in a future essay–for now, I’d like you to consider the question: what’s going to happen when we figure out how to wake them up?

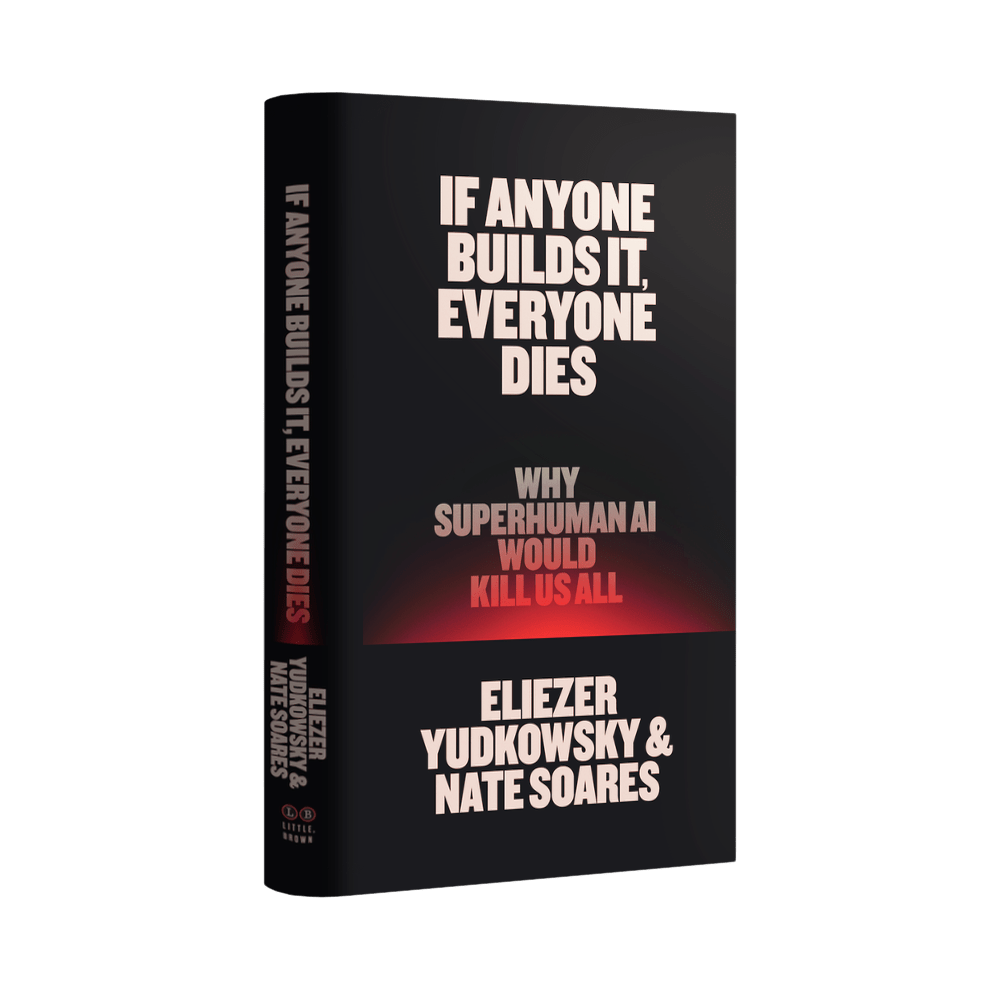

Eliezer Yudkowsky and Nate Soares are experts who have been working on the problem of AI safety¹ for decades. Their answer is simple:

Frankly, there are already more than enough reasons to shut down AI development: the environmental devastation, the unprecedented intellectual property theft, the investment bubble that still shows no signs of turning a profit, the threat to the economy from job loss and power consumption and monopolies, and the negative effects its use has on the cognitive abilities of its users, not to mention the fact that most people just plain don’t like it or want it. The additional threat of global extinction ought to be unnecessary. What’s the opposite of “gilding the lily”? Maybe “poisoning the warhead”? Whatever you care to call it, this is it.

Please buy the book, check out the website, read the arguments, get involved, learn more, spread the word: any or all of the above. It is possible, however unlikely, that doing so could literally be the most important thing you ever do.

1. The technical term is “alignment.” More concretely, it means working out how to reason mathematically about things like decisions and goal-seeking, so that debates about what “machines capable of planning and reasoning” will or won’t do don’t all devolve into philosophy and/or fist-fights.