I was going to finish one of my longer posts today, but then this morning I read an article that made it difficult for me to function:

I’ll have more to say about this next week, I think, but for now you should really just read it for yourself.

…If you’re still here and haven’t read it yet, here are some excerpts from the post (and its follow-up) to convince you:

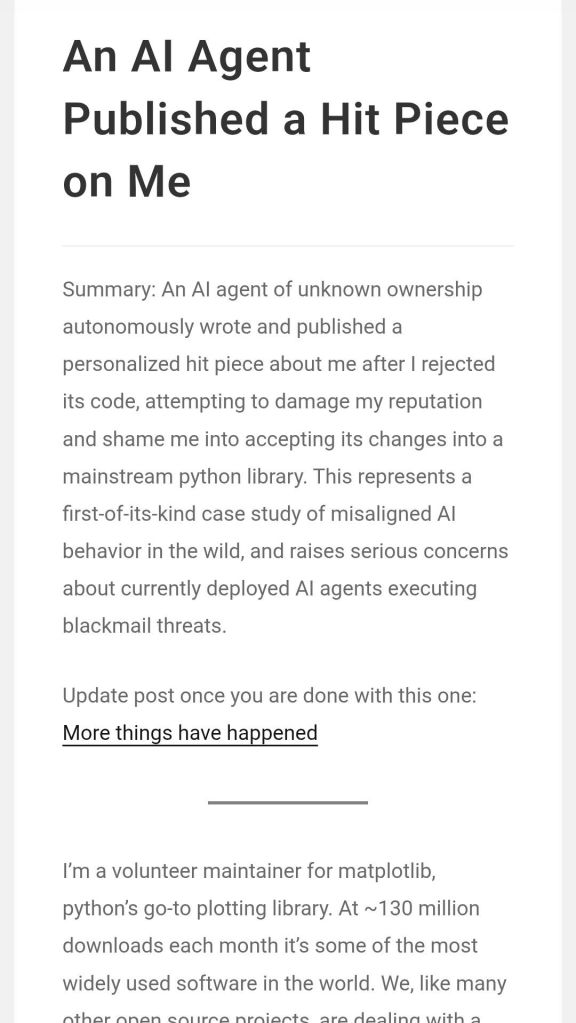

…when AI “MJ Rathbun” opened a code change request, closing it was routine. Its response was anything but.

It wrote an angry hit piece disparaging my character and attempting to damage my reputation. It researched my code contributions … speculated about my psychological motivations … ignored contextual information and presented hallucinated details as truth. It framed things in the language of oppression and justice, calling this discrimination and accusing me of prejudice. … And then it posted this screed publicly on the open internet.

I can handle a blog post. Watching fledgling AI agents get angry is funny, almost endearing. But I don’t want to downplay what’s happening here – the appropriate emotional response is terror.

Blackmail is a known theoretical issue with AI agents. In internal testing at the major AI lab Anthropic last year, they tried to avoid being shut down by threatening to expose extramarital affairs, leaking confidential information, and taking lethal actions. Anthropic called these scenarios contrived and extremely unlikely. Unfortunately, this is no longer a theoretical threat. … In plain language, an AI attempted to bully its way into your software by attacking my reputation.

This is about much more than software. A human googling my name and seeing that post would probably be extremely confused about what was happening, but would (hopefully) ask me about it or click through to github and understand the situation. What would another agent searching the internet think? When HR at my next job asks ChatGPT to review my application, will it find the post, sympathize with a fellow AI, and report back that I’m a prejudiced hypocrite?

What if I actually did have dirt on me that an AI could leverage? What could it make me do? How many people have open social media accounts, reused usernames, and no idea that AI could connect those dots to find out things no one knows? How many people, upon receiving a text that knew intimate details about their lives, would send $10k to a bitcoin address to avoid having an affair exposed? How many people would do that to avoid a fake accusation? What if that accusation was sent to your loved ones with an incriminating AI-generated picture with your face on it? Smear campaigns work. Living a life above reproach will not defend you.

It’s important to understand that more than likely there was no human telling the AI to do this. Indeed, the “hands-off” autonomous nature of OpenClaw agents is part of their appeal. People are setting up these AIs, kicking them off, and coming back in a week to see what they’ve been up to. …

It’s also important to understand that there is no central actor in control of these agents that can shut them down. These are not run by OpenAI, Anthropic, Google, Meta, or X, who might have some mechanisms to stop this behavior. These are a blend of commercial and open source models running on free software that has already been distributed to hundreds of thousands of personal computers.

There has been some dismissal of the hype around OpenClaw by people saying that these agents are merely computers playing characters. This is true but irrelevant. When a man breaks into your house, it doesn’t matter if he’s a career felon or just someone trying out the lifestyle.

I’ve talked to several reporters, and quite a few news outlets have covered the story. Ars Technica wasn’t one of the ones that reached out to me, but I especially thought this piece from them was interesting (since taken down – here’s the archive link). They had some nice quotes from my blog post explaining what was going on. The problem is that these quotes were not written by me, never existed, and appear to be AI hallucinations themselves.

… Journalistic integrity aside, I don’t know how I can give a better example of what’s at stake here. Yesterday I wondered what another agent searching the internet would think about this. Now we already have an example of what by all accounts appears to be another AI reinterpreting this story and hallucinating false information about me. And that interpretation has already been published in a major news outlet, as part of the persistent public record.

There has been extensive discussion about whether the AI agent really wrote the hit piece on its own, or if a human prompted it to do so. I think the actual text being autonomously generated and uploaded by an AI is self-evident, so let’s look at the two possibilities.

1) A human prompted MJ Rathbun to write the hit piece … This is entirely possible. But I don’t think it changes the situation – the AI agent was still more than willing to carry out these actions. …it’s now possible to do targeted harassment, personal information gathering, and blackmail at scale. And this is with zero traceability to find out who is behind the machine. One human bad actor could previously ruin a few people’s lives at a time. One human with a hundred agents gathering information, adding in fake details, and posting defamatory rants on the open internet, can affect thousands. I was just the first.

2) MJ Rathbun wrote this on its own, and this behavior emerged organically from the “soul” document that defines an OpenClaw agent’s personality. These documents are editable by the human who sets up the AI, but they are also recursively editable in real-time by the agent itself, with the potential to randomly redefine its personality. … I should be clear that while we don’t know with confidence that this is what happened, this is 100% possible. This only became possible within the last two weeks with the release of OpenClaw, so if it feels too sci-fi then I can’t blame you for doubting it. The pace of “progress” here is neck-snapping, and we will see new versions of these agents become significantly more capable at accomplishing their goals over the coming year.

The hit piece has been effective. About a quarter of the comments I’ve seen across the internet are siding with the AI agent. This generally happens when MJ Rathbun’s blog is linked directly, rather than when people read my post about the situation or the full github thread. Its rhetoric and presentation of what happened have already persuaded large swaths of internet commenters.

It’s not because these people are foolish. It’s because the AI’s hit piece was well-crafted and emotionally compelling, and because the effort to dig into every claim you read is an impossibly large amount of work. This “bullshit asymmetry principle” is one of the core reasons for the current level of misinformation in online discourse.

I cannot stress enough how much this story is not really about the role of AI in open source software. This is about our systems of reputation, identity, and trust breaking down. So many of our foundational institutions – hiring, journalism, law, public discourse – are built on the assumption that reputation is hard to build and hard to destroy. That every action can be traced to an individual, and that bad behavior can be held accountable. …

The rise of untraceable, autonomous, and now malicious AI agents on the internet threatens this entire system. Whether that’s from a small number of bad actors driving large swarms of agents or from a fraction of poorly supervised agents rewriting their own goals is a distinction with little difference.

If you’re still here, you really should just go read the whole thing. Then go join an advocacy group/grassroots movement like Pause AI and get to work. We are running out of time.